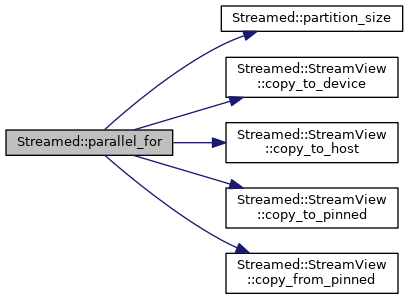

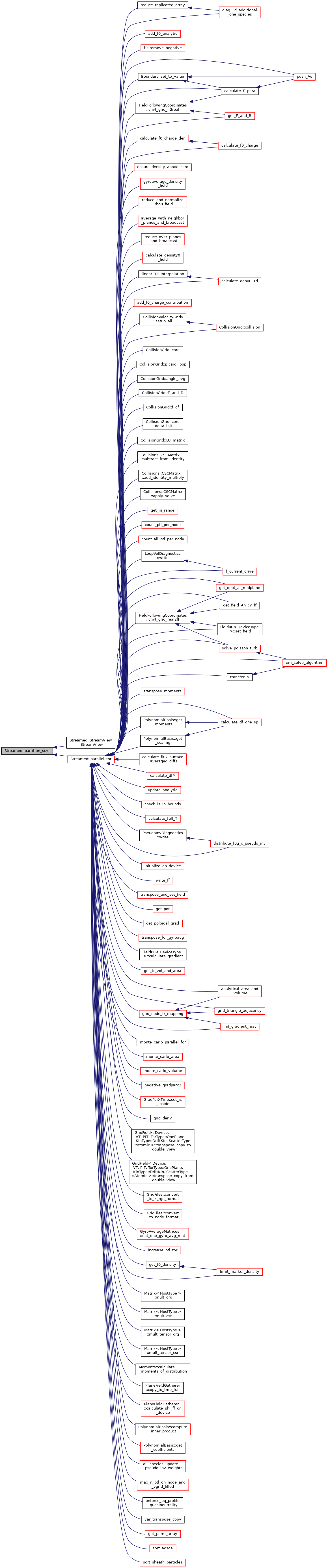

The streamed parallel_for creates 3 GPU streams and uses them to asynchronously transfer data to and from the GPU while kernels execute. This is done by splitting the data into chunks. Steps are synchronized so that the 3 streams never operate on the same chunk at the same time. Empirically, the host memory must be pinned to achieve fully asynchronous data transfer. Using pinned memory is optional; when it is enabled, two extra tasks are added per step: a preliminary task that copies outgoing data into pinned memory, and a final task that copies returning data out of pinned memory.
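The chunked pipeline described above can be sketched as a plain schedule. This is only an illustration under stated assumptions, not the library's implementation: `Task` and `build_schedule` are hypothetical names, the pinned-memory copies are left out, and the mapping of tasks to streams is assumed to be one send, one execute, and one return per step, each on a different chunk.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical illustration: at step s, one task sends chunk s, one
// executes chunk s-1, and one returns chunk s-2. How the real code assigns
// these tasks to its 3 streams is an assumption here.
struct Task {
    int step;          // pipeline step at which the task runs
    int chunk;         // index of the chunk the task operates on
    std::string kind;  // "send", "execute", or "return"
};

std::vector<Task> build_schedule(int n_chunks) {
    assert(n_chunks >= 0);
    std::vector<Task> tasks;
    const char* kinds[3] = {"send", "execute", "return"};
    // N chunks need N + 2 steps: the two extra steps drain the pipeline.
    for (int step = 0; step < n_chunks + 2; ++step) {
        for (int stage = 0; stage < 3; ++stage) {
            // Each stage lags the previous one by a step, so the tasks
            // within a step always touch different chunks.
            int chunk = step - stage;
            if (chunk >= 0 && chunk < n_chunks) {
                tasks.push_back({step, chunk, kinds[stage]});
            }
        }
    }
    return tasks;
}
```

Because every task in a step works on a distinct chunk, the step boundary is the only synchronization point this sketch needs.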

The smaller the chunk size, the more overlap occurs. However, if the chunks are too small, the device will not be saturated and performance will degrade.

The streamed parallel_for should take the following amount of time to finish N chunks, given execution time E and one-way communication time C per chunk:

$$T = \max(E, C)\,N + 2C$$

If either the send or the return is absent, this becomes:

$$T = \max(E, C)\,N + \min(E, C)$$

If the device is saturated, so that $E = E_{ptl} \cdot ptl\_per\_chunk$, then in the limit of large n_ptl:

$$T \to \max(E_{ptl}, C_{ptl})\,n_{ptl}$$
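The timing estimate can be evaluated directly to compare chunk sizes. The following is a minimal sketch of that model only: `streamed_time` and `streamed_time_for_particles` are hypothetical helpers, not part of the documented API, and the second one assumes that the communication time also scales linearly with the chunk size (C = C_ptl * ptl_per_chunk), which the large-n_ptl limit suggests but the text does not state.

```cpp
#include <algorithm>
#include <cassert>

// Estimated time to stream n_chunks chunks, given per-chunk execution time
// `exec` (E) and one-way communication time `comm` (C).
// send_and_return = false models the case where the send or return is absent.
double streamed_time(int n_chunks, double exec, double comm,
                     bool send_and_return = true) {
    assert(n_chunks >= 0 && exec >= 0.0 && comm >= 0.0);
    double steady = std::max(exec, comm) * n_chunks;
    return send_and_return ? steady + 2.0 * comm
                           : steady + std::min(exec, comm);
}

// Same estimate expressed per particle. Assumption: both execution and
// communication cost scale linearly with the number of particles per chunk.
double streamed_time_for_particles(int n_ptl, int ptl_per_chunk,
                                   double exec_ptl, double comm_ptl,
                                   bool send_and_return = true) {
    assert(n_ptl >= 0 && ptl_per_chunk > 0);
    int n_chunks = (n_ptl + ptl_per_chunk - 1) / ptl_per_chunk;  // round up
    return streamed_time(n_chunks, exec_ptl * ptl_per_chunk,
                         comm_ptl * ptl_per_chunk, send_and_return);
}
```

In this model, shrinking `ptl_per_chunk` shrinks the fixed `2*C` term while the steady-state term is unchanged, which matches the remark that smaller chunks give more overlap. The model does not capture the loss of device saturation at very small chunk sizes.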

- Parameters

| Direction | Name | Description |
| --- | --- | --- |
| [in] | name | is the label given to the Kokkos parallel_for |
| [in] | n_ptl | is the number of particles |
| [in] | func | is the lambda function to be executed |
| [in] | option | controls whether the streamed parallel_for excludes the send or the return |
| [in] | aosoa_h | is the host AoSoA where the data resides |
| [in] | aosoa_d | is the device AoSoA that will be streamed to |

- Returns

- void